- AD FS Configuration

  - AD FS must be properly configured and integrated with D365FO.
  - The AOS (Application Object Server) uses AD FS metadata to validate tokens.
  - Ensure the AD FS XML configuration file is accessible to the AOS.

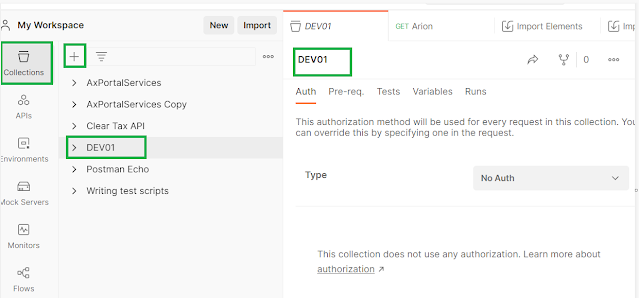
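To check what the AOS will see, you can derive the usual AD FS metadata URLs from the AD FS host and read the validation-related fields from the OpenID Connect discovery document. This is a minimal sketch, assuming AD FS 2016 or later (which publishes `/adfs/.well-known/openid-configuration`). The host name and the helper names `adfs_metadata_urls` and `summarize_discovery` are hypothetical. `issuer`, `token_endpoint`, and `jwks_uri` are standard discovery fields.

```python
import json
from urllib.parse import urlunsplit

def adfs_metadata_urls(adfs_host: str) -> dict:
    """Build standard AD FS metadata URLs for a host such as 'adfs.contoso.com' (hypothetical)."""
    def url(path: str) -> str:
        return urlunsplit(("https", adfs_host, path, "", ""))
    return {
        # WS-Federation metadata XML published by AD FS
        "federation_metadata": url("/FederationMetadata/2007-06/FederationMetadata.xml"),
        # OpenID Connect discovery document (assumes AD FS 2016+)
        "openid_configuration": url("/adfs/.well-known/openid-configuration"),
    }

def summarize_discovery(document: str) -> dict:
    """Extract the fields a token validator relies on from a discovery JSON document."""
    config = json.loads(document)
    return {key: config.get(key) for key in ("issuer", "token_endpoint", "jwks_uri")}
```

Opening these URLs from the AOS machine (for example with `urllib.request.urlopen`) is a quick way to confirm that the metadata is reachable.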

- Client Application Setup

  - External apps (e.g., Postman, .NET clients) must be registered in AD FS.
  - You’ll need:
    - Client ID (from AD FS or Azure App Registration)
    - Resource URI (typically the D365FO base URL)
    - Token endpoint (the AD FS OAuth2 endpoint)

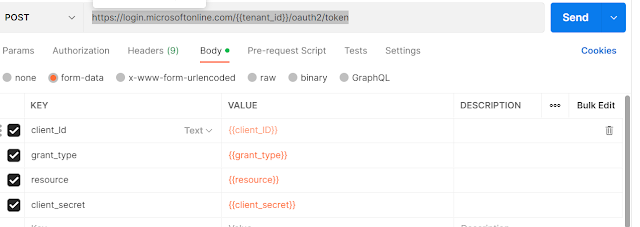
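The three values above can be kept together and checked before any request is made. This is a minimal sketch: the class and field names are hypothetical, and `for_hosts` assumes the default AD FS token path `/adfs/oauth2/token`. The resource string is used exactly as given because it has to match what was registered in AD FS.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit

@dataclass(frozen=True)
class AdfsClientSettings:
    """Client ID, resource URI, and token endpoint (hypothetical names)."""
    client_id: str
    resource: str         # typically the D365FO base URL
    token_endpoint: str   # AD FS OAuth2 token endpoint

    @classmethod
    def for_hosts(cls, client_id: str, adfs_host: str, d365fo_url: str) -> "AdfsClientSettings":
        # Assumes the default AD FS OAuth2 path
        return cls(client_id=client_id, resource=d365fo_url,
                   token_endpoint=f"https://{adfs_host}/adfs/oauth2/token")

    def validate(self) -> None:
        """Fail fast on obviously wrong settings."""
        if not self.client_id:
            raise ValueError("client_id is required")
        for name in ("resource", "token_endpoint"):
            if urlsplit(getattr(self, name)).scheme != "https":
                raise ValueError(f"{name} must be an https URL")
```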

- Token

Acquisition

ü

Use OAuth2 protocol to acquire a bearer token.

ü

The token request includes:

§ grant_type=password

§ client_id

§ username

and password

§ resource

(D365FO URL)

ü

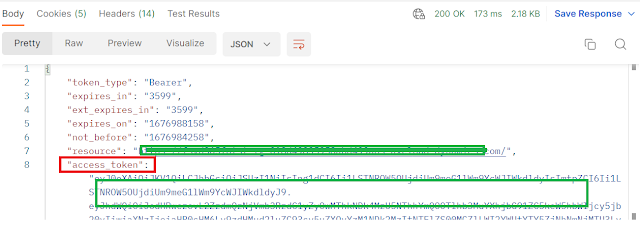

AD FS returns a JWT token if credentials are

valid.

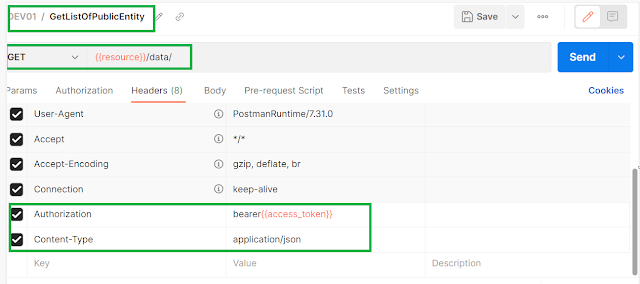
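To see what AD FS put in the returned token, you can decode its payload locally. This is a minimal debugging sketch that only base64url-decodes the claims. It does **not** verify the signature, because that is the AOS's job using the AD FS metadata. `aud` and `exp` are standard JWT claims. The helper name is hypothetical.

```python
import base64
import json

def decode_jwt_claims(token: str) -> dict:
    """Return the (unverified) claims of a JWT, for inspection only."""
    try:
        _header, payload, _signature = token.split(".")
    except ValueError:
        raise ValueError("not a JWT: expected three dot-separated parts") from None
    # JWT segments are base64url without padding; restore it before decoding
    padded = payload + "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))
```

If the AOS rejects a token, a good first check is whether `aud` matches the D365FO resource URL.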

- Calling OData

  - Include the token in the Authorization header: `Authorization: Bearer <access_token>`
  - Use standard OData URLs, such as `https://<your-d365fo-url>/data/Customers`

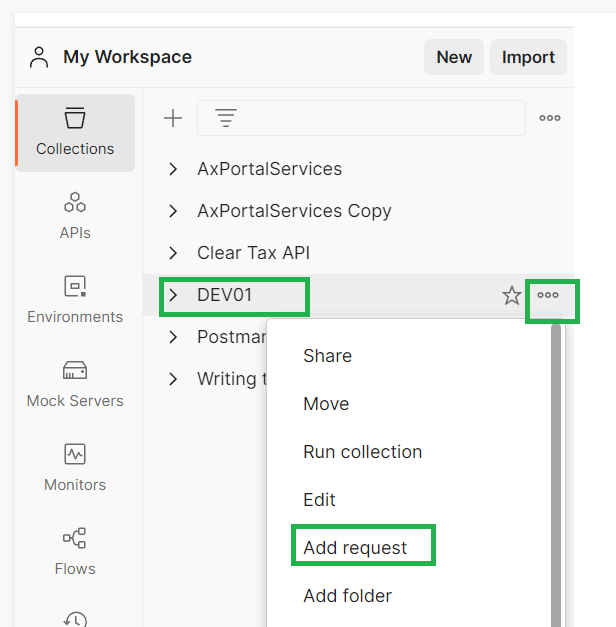
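The header and URL pattern above can be combined into a ready-to-send request. This is a minimal sketch using only the standard library. The helper name is hypothetical, and `$top` is used only as an example of a standard OData query option.

```python
from typing import Optional
from urllib.parse import quote, urlencode
from urllib.request import Request

def build_odata_request(base_url: str, entity: str, access_token: str,
                        query: Optional[dict] = None) -> Request:
    """Prepare (but do not send) a GET request for a D365FO data entity."""
    url = f"{base_url.rstrip('/')}/data/{quote(entity)}"
    if query:
        # Keep '$' literal so options read as $top, $filter, ... in logs
        url += "?" + urlencode(query, safe="$,")
    return Request(
        url,
        headers={
            "Authorization": f"Bearer {access_token}",  # one space after "Bearer"
            "Accept": "application/json",
        },
        method="GET",
    )
```

Passing the result to `urllib.request.urlopen` sends it. Building the request separately makes the header easy to inspect before anything goes over the wire.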

- Get Token

  - POST to the AD FS token endpoint: `https://<adfs-url>/adfs/oauth2/token`
  - Body (x-www-form-urlencoded):
    - `client_id=<your-client-id>`
    - `username=<your-username>`
    - `password=<your-password>`
    - `grant_type=password`
    - `resource=https://<your-d365fo-url>`
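The POST above can be built with the standard library. `urlencode` takes care of escaping characters such as `&` or `@` in the password. This is a minimal sketch: the function name is hypothetical, and the request is only prepared here, not sent.

```python
from urllib.parse import urlencode
from urllib.request import Request

def build_token_request(token_endpoint: str, client_id: str, username: str,
                        password: str, resource: str) -> Request:
    """Prepare the AD FS password-grant token request (not sent)."""
    body = urlencode({
        "client_id": client_id,
        "username": username,
        "password": password,
        "grant_type": "password",
        "resource": resource,  # the D365FO URL
    }).encode("ascii")
    return Request(
        token_endpoint,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
```

Sending it with `urllib.request.urlopen(req)` and reading the JSON body gives the token response.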

- Use Token

  - Add the `Authorization: Bearer <token>` header to your OData request.
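Turning the token response into that header is a small step, but it is a good place to surface AD FS errors clearly. The fields `access_token`, `error`, and `error_description` follow the OAuth 2.0 specification (RFC 6749). The helper name is hypothetical.

```python
import json

def authorization_header(token_response: str) -> dict:
    """Convert an AD FS token response body into the header for OData calls."""
    payload = json.loads(token_response)
    if "access_token" not in payload:
        # OAuth2 error responses carry 'error' and, optionally, 'error_description'
        raise RuntimeError(
            f"token request failed: {payload.get('error')}: {payload.get('error_description')}"
        )
    return {"Authorization": f"Bearer {payload['access_token']}"}
```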

- Test Endpoint

  - GET: `https://<your-d365fo-url>/data/Customers`
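A successful GET returns an OData v4 JSON body whose rows are in a `value` array. If the service pages the result, it includes an `@odata.nextLink` URL for the next page. This is a minimal sketch of reading all rows. `fetch` is a hypothetical caller-supplied function that GETs a URL with the bearer token attached and returns the body text. The `Name` field in the test data is made up.

```python
import json
from typing import Callable, Iterator

def iter_entity_rows(first_page: str, fetch: Callable[[str], str]) -> Iterator[dict]:
    """Yield rows from an OData v4 JSON collection, following @odata.nextLink pages."""
    page = json.loads(first_page)
    while True:
        yield from page.get("value", [])
        next_link = page.get("@odata.nextLink")
        if not next_link:
            return
        page = json.loads(fetch(next_link))
```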

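Token Expiry: Tokens typically expire after 1 hour. Refresh or reacquire them as needed.

AD FS Clock Skew: Keep the clocks on the AD FS and AOS servers synchronized. A skewed clock can cause otherwise valid tokens to be rejected.

SSL Certificates: AD FS endpoints must be secured with valid SSL/TLS certificates.

User Permissions: The authenticated user must have access to the data entities being called (in D365FO, through the user's security roles).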
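For token expiry, a client can reuse a token and fetch a new one shortly before `expires_in` runs out. This is a minimal sketch: `acquire` is a hypothetical callable that returns `(access_token, expires_in_seconds)`, for example a wrapper around the AD FS token request, and the 5-minute margin is an arbitrary choice. Renewing early does not replace time synchronization between AD FS and the AOS.

```python
import time
from typing import Callable, Optional, Tuple

class CachedToken:
    """Reuse an access token until shortly before it expires, then reacquire it."""

    def __init__(self, acquire: Callable[[], Tuple[str, float]],
                 margin_seconds: float = 300,
                 clock: Callable[[], float] = time.monotonic):
        self._acquire = acquire
        self._margin = margin_seconds  # renew early so a token never arrives just as it expires
        self._clock = clock            # injectable for testing
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        now = self._clock()
        if self._token is None or now >= self._expires_at - self._margin:
            token, expires_in = self._acquire()
            self._token = token
            self._expires_at = now + float(expires_in)
        return self._token
```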